Artificial Intelligence (AI) has been a subject of fascination and speculation for centuries, but its journey from theoretical concept to practical application is a testament to human ingenuity and persistence.

In this blog post, we'll delve into the rich history of AI, exploring its origins, major milestones, and the ethical and societal implications of its development.

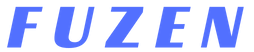

AI history: from Turing to today

Early concepts and influences

Before the term "Artificial Intelligence" was coined in the mid-1950s, the idea of creating artificial beings was prevalent in myths, folklore, and religious texts. From the ancient Greek myth of Pygmalion to the Jewish legend of the Golem, stories of humans creating life from non-life captured the imagination of people throughout history.

However, it was Alan Turing, a British mathematician and computer scientist, who laid the foundation for modern AI with his groundbreaking work on computation and logic.

In 1936, Turing introduced an abstract model of computation, now known as the Turing machine, along with the concept of a "universal machine" that could perform any computation that could be described algorithmically.

Turing's seminal paper, "Computing Machinery and Intelligence," published in 1950, proposed the "imitation game," now famous as the Turing Test, as a criterion for determining whether a machine exhibits intelligent behavior.

This work not only provided a theoretical framework for AI but also sparked debates about the nature of intelligence and the possibility of creating thinking machines.

The Dartmouth conference and the birth of AI

The Dartmouth Conference, held in the summer of 1956, is widely regarded as the birth of AI as a formal field of study.

Organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, the conference brought together leading scientists and mathematicians, among them Allen Newell and Herbert Simon, to explore the potential of machines to simulate human intelligence.

The participants discussed ambitious goals, including natural language processing, problem-solving, and machine learning, laying the groundwork for decades of research and development in AI.

Among the key contributions of the Dartmouth Conference was the proposal of a research agenda for AI, which outlined specific tasks and challenges to be addressed by researchers in the field.

This agenda served as a guiding framework for early AI research and played a crucial role in shaping the direction of the field in its formative years.

Early AI milestones

In the years around the Dartmouth Conference, AI researchers made rapid progress in developing computer programs capable of performing tasks traditionally associated with human intelligence.

One of the earliest successes was the Logic Theorist, developed by Allen Newell, Herbert Simon, and Cliff Shaw in 1955 and 1956, which could prove theorems from Whitehead and Russell's "Principia Mathematica" using symbolic logic. This groundbreaking achievement demonstrated the potential of AI to automate complex reasoning tasks previously thought to require human intelligence.

Another milestone in early AI research was the development of neural networks, inspired by the structure and function of the human brain.

Frank Rosenblatt's Perceptron, introduced in the late 1950s, was one of the first computational models of a neural network capable of learning from examples. However, enthusiasm for neural networks waned after 1969, when Marvin Minsky and Seymour Papert published "Perceptrons," a book that highlighted the limitations of single-layer networks: they cannot represent functions that are not linearly separable, such as XOR.
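To make the single-layer limitation concrete, here is a minimal sketch of a perceptron-style learning rule in plain Python. It is an illustration under stated assumptions, not Rosenblatt's original implementation: the function names `train_perceptron` and `predict`, the learning rate, and the epoch limit are all hypothetical choices made for this example. The same rule is trained on AND, which is linearly separable, and on XOR, which is not.

```python
from itertools import product


def predict(w, b, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(samples, epochs=100, lr=1.0):
    """Train a single-layer perceptron on two binary inputs.

    Returns (weights, bias, converged). `converged` is True only if a
    full pass over the samples produced no misclassifications.
    The epoch limit and learning rate are illustrative assumptions.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, target in samples:
            delta = target - predict(w, b, x)
            if delta != 0:
                # Perceptron update: nudge the weights toward the target.
                w[0] += lr * delta * x[0]
                w[1] += lr * delta * x[1]
                b += lr * delta
                errors += 1
        if errors == 0:
            return w, b, True
    return w, b, False


inputs = list(product([0, 1], repeat=2))
AND = [(x, x[0] & x[1]) for x in inputs]
XOR = [(x, x[0] ^ x[1]) for x in inputs]

and_w, and_b, and_converged = train_perceptron(AND)
xor_w, xor_b, xor_converged = train_perceptron(XOR)

print("AND learned:", and_converged)
print("XOR learned:", xor_converged)
```

In this sketch, training on AND settles on a separating line after a handful of passes, while training on XOR never completes an error-free pass, because no single line separates its two classes. Networks with more than one layer can represent XOR, but practical methods for training them only became widely used later.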

AI summers and winters

The history of AI is characterized by cycles of optimism and disillusionment, often referred to as "AI summers" and "AI winters."

The first AI winter set in during the mid-1970s, as early AI systems failed to live up to their lofty expectations and funding for AI research dried up. The field experienced a resurgence in the 1980s with the rise of expert systems, which demonstrated AI's potential in specialized domains such as medicine, finance, and engineering.

However, this renewed optimism was short-lived: a second AI winter followed in the late 1980s and early 1990s, as expert systems proved costly to maintain and brittle outside their narrow domains, and results once again fell short of overhyped expectations.

Breakthroughs in modern AI

The early 21st century witnessed a renaissance in AI research, fueled by advances in machine learning, big data, and computational power. Among the most visible breakthroughs of this period are OpenAI's GPT (Generative Pre-trained Transformer) models, particularly GPT-3, released in 2020, and the image generation model DALL-E, announced in 2021.

The rise of AI also sparked renewed interest in robotics, with autonomous robots being deployed in factories, warehouses, and even households to perform tasks ranging from assembly and logistics to cleaning and caregiving.

The future of AI

Looking ahead, the future of AI holds both promise and peril. On one hand, AI has the potential to revolutionize fields ranging from healthcare to environmental conservation.

On the other hand, there are legitimate concerns about the concentration of power in the hands of a few tech giants, as well as the potential for AI to exacerbate existing social inequalities. As we navigate this uncertain terrain, it is crucial to approach AI development with caution, humility, and a commitment to ethical principles.

Conclusion

The history of AI is a testament to human curiosity, creativity, and perseverance. From the theoretical musings of Alan Turing to the real-world applications of modern machine learning, AI has come a long way in a relatively short span of time. As we reflect on the past and look to the future, let us remember that the journey from Turing to today is just the beginning of a much larger story yet to unfold.

However, as AI technology continues to advance, so do the concerns about its ethical and societal implications. Issues such as job displacement, algorithmic bias, privacy infringement, and autonomous weapons have become hot topics of debate among policymakers, ethicists, and technologists.

Addressing these challenges requires a multidisciplinary approach that brings together experts from diverse fields, including computer science, ethics, law, sociology, and philosophy. Efforts to develop ethical guidelines, regulatory frameworks, and governance mechanisms for AI are underway, but much work remains to be done to ensure that AI technologies are developed and deployed in a responsible and socially beneficial manner.